My project will examine the science behind a cappella singing. I will study the waveforms of different voices, both individually and combined, in order to consider how frequency and vowel/phoneme quality affect blend. The motivation for this project comes from my experience in my a cappella group, Under Construction, combined with what I learned in my phonetics and phonology course.

When my group rehearses our music, one of the most challenging things to do (besides staying in key) is blending with the other members of the group. Even when we’re all singing the correct notes, if someone’s vowel differs from the rest, they stick out and the piece sounds bad. We need to blend on high notes as well as low notes: basses need to be able to blend with sopranos. Similarly, we need to sound uniform when we’re singing the same vowels/phones, but I wonder whether some phonemic sounds are more conducive to blend based solely on the phonetic properties present in their waveforms.

I plan to complete this analysis by first recording members of my a cappella group in Audacity. I will then import the files into Praat, a phonetics program, where I will graph and analyze the formants present in different sounds and examine how they differ when multiple people are singing, when those people are or are not attempting to blend, and so on.
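
As a rough illustration of the comparison step, the sketch below averages per-frame formant values for one singer’s solo take and blended take, then reports how far each formant moved. It assumes the formant tracks have already been measured in Praat and exported as plain CSV text with columns `time`, `F1`, `F2`; that layout, the function names, and the numbers are all hypothetical, chosen for illustration rather than taken from real recordings.

```python
import csv
import io
from statistics import mean


def mean_formants(csv_text):
    """Mean F1 and F2 (Hz) over all frames of a CSV with columns time,F1,F2.
    Frames whose F1 or F2 is not a number are skipped."""
    f1_vals, f2_vals = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            f1, f2 = float(row["F1"]), float(row["F2"])
        except ValueError:
            continue  # frame with no usable formant value
        f1_vals.append(f1)
        f2_vals.append(f2)
    return mean(f1_vals), mean(f2_vals)


def shift(solo, blend):
    """Change in each formant (Hz) from the solo take to the blended take."""
    return tuple(b - s for s, b in zip(solo, blend))


# HYPOTHETICAL exports for one singer's /a/, not real measurements.
solo_csv = "time,F1,F2\n0.10,760,1220\n0.11,770,1240\n0.12,undefined,1230\n"
blend_csv = "time,F1,F2\n0.10,720,1150\n0.11,730,1170\n"

solo = mean_formants(solo_csv)
blend = mean_formants(blend_csv)
print(solo, blend, shift(solo, blend))  # (765.0, 1230.0) (725.0, 1160.0) (-40.0, -70.0)
```

Repeating this per singer and per vowel would show whether blending moves everyone’s formants in the same direction.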

There has been some scientific research on choral blend. I found an article in the Journal of Research in Music Education called “An Acoustical Study of Individual Voices in Choral Blend” that examines how singers’ formants differ when they sing solo versus when they try to blend with a choir (https://doi.org/10.1177/002242948002800205).

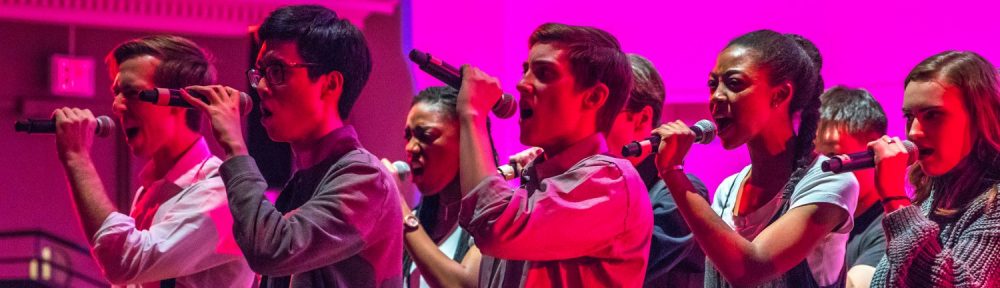

Here is a link to a video of my a cappella group performing at a concert at Yale in the hall. This example is especially interesting because we’d never performed in this space before, and the room acoustics made it difficult to hear one another, which likely had a huge effect on our blend. https://www.youtube.com

This really sounds like a fascinating idea. Another way you could add complexity to the project and the analysis is to consider the effect that different genres of music have on blend. It would be cool to pick a few specific pieces and contrast them.

You’re totally right! Genres like choral music put a ton of importance on blend because of the desired timbre, whereas pop songs tend to have brighter sounds and wider vowels that are much more challenging to blend. It’s interesting that you mention that, because the same word can be sung differently in different contexts. The word “he” in a pop arrangement likely uses the vowel /i/, while in choral music, it uses /y/, which is the rounded counterpart. It would be fascinating to see if blend is better or worse when the vowels align more with normal human speech (the more pop-like music).

Have you decided which sets of sounds or formants you’d like to focus on and rigorously analyze? It seems like you’re planning on following the same methods as the paper you linked. Are you going to try to build on those results a bit, or verify them yourself? I suppose if you knew the best ways to blend from a formant perspective, you might not need to rely as much on hearing others in order to dynamically adjust your own voice to blend.

I’m still building on my ideas, but as of now I’m expecting to at least analyze the three most phonetically distinct vowels: /i/, /u/, and /a/. I hope to include a vowel that is normally diphthongized as well, such as /o/, in order to see the effects of changing formants. I found the paper after coming up with my basic ideas, so now that I know someone has investigated almost the exact same problem, I’m thinking of altering my approach a bit. After today’s awesome presentation on deep learning and song recognition, I’m considering attempting some sort of vowel recognition or vowel production algorithm, which would simply use the average frequencies of different formants to differentiate vowels. Great questions!
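
A minimal sketch of the vowel recognition idea, assuming each vowel token has already been reduced to its average F1 and F2 (for example, from Praat measurements): compute the mean (F1, F2) for each vowel label, then label a new token with the vowel whose mean is nearest. This is a plain nearest-centroid classifier rather than a deep learning model, and the function names and training values are hypothetical placeholders, not measurements.

```python
import math
from collections import defaultdict


def train_centroids(tokens):
    """Average the (F1, F2) values for each vowel label.
    `tokens` is a list of (label, f1_hz, f2_hz) tuples."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # label -> [F1 sum, F2 sum, count]
    for label, f1, f2 in tokens:
        s = sums[label]
        s[0] += f1
        s[1] += f2
        s[2] += 1
    return {label: (s[0] / s[2], s[1] / s[2]) for label, s in sums.items()}


def classify(f1, f2, centroids):
    """Return the vowel whose average (F1, F2) is closest (Euclidean, in Hz)."""
    return min(centroids, key=lambda v: math.dist((f1, f2), centroids[v]))


# HYPOTHETICAL training tokens in Hz; real values would come from
# Praat measurements of the group's recordings.
training = [
    ("i", 290, 2300), ("i", 310, 2200),
    ("u", 320, 900), ("u", 340, 850),
    ("a", 740, 1200), ("a", 780, 1150),
]
centroids = train_centroids(training)
print(classify(330, 2100, centroids))  # i
print(classify(700, 1250, centroids))  # a
```

Because formant frequencies shift with vocal tract size, a soprano’s /i/ and a bass’s /i/ can sit in quite different places, so with mixed voice parts some per-speaker normalization of the formant values would probably be needed before these distances are comparable.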

This seems awesome! Based on your previous knowledge and on what we’ve discussed in class, are there any particular results that you’re expecting? What result would you consider surprising?

Great questions! Based entirely on my own experience, I tend to hear the best blend when people sing “oo”; it ends up sounding super resonant, as if only one person were singing. I’d be more surprised to hear good blend on a vowel like long “o” or long “a.” Since English speakers pronounce these as diphthongs, most of them naturally shift their first and second formants over the course of the syllable. This makes blending more difficult because it adds a time component: singers need to blend not only on the starting vowel, but also across the change to the second vowel quality. Based on what we learned in class, I’m guessing that higher voices will blend better with one another in terms of matching pitch, since the human ear isn’t great at differentiating higher-pitched sounds. I’d be surprised if we got really good blend between sopranos and basses, since the stark difference in pitch will likely contribute greatly to whether listeners perceive the timbres as matching.
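
The time component of a diphthong can be made concrete with a toy model. Here each singer’s long “o” is treated as a straight-line glide in F1 and F2 between two targets, and the mismatch between two singers is the frame-by-frame distance between their (F1, F2) points. The function names, formant targets, 150 ms glide, and 50 ms timing offset are all hypothetical values for illustration; real trajectories would come from Praat and are unlikely to be perfectly linear.

```python
import math


def glide(t, start, end, onset, duration):
    """Formant value (Hz) at time t for a linear glide from `start` to `end`
    that begins at `onset` seconds and lasts `duration` seconds."""
    if t <= onset:
        return start
    if t >= onset + duration:
        return end
    return start + (t - onset) / duration * (end - start)


def trajectory(onset, times, f1=(500.0, 350.0), f2=(900.0, 800.0), duration=0.15):
    """(F1, F2) points at `times` for one singer's diphthong.
    The default targets are HYPOTHETICAL placeholders, not measurements."""
    return [(glide(t, f1[0], f1[1], onset, duration),
             glide(t, f2[0], f2[1], onset, duration)) for t in times]


def mismatch(track_a, track_b):
    """Frame-by-frame Euclidean distance (Hz) between two (F1, F2) tracks."""
    return [math.dist(a, b) for a, b in zip(track_a, track_b)]


times = [i * 0.01 for i in range(51)]          # 0.0 to 0.5 s in 10 ms frames
singer_a = trajectory(onset=0.20, times=times)
singer_b = trajectory(onset=0.25, times=times)  # same targets, glides 50 ms later

aligned = mismatch(singer_a, singer_a)
offset = mismatch(singer_a, singer_b)
print(max(aligned), round(max(offset), 1))
```

With identical timing the mismatch stays at zero throughout, while starting the glide 50 ms late produces a peak mismatch of roughly 60 Hz in this toy setup, even though both singers reach exactly the same vowel targets. Weighting F1 and F2 differences equally in Hz is itself a simplification.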

Olivia, this sounds like a great project. It’s totally okay to repeat the analysis from the paper you are looking at and see what you get; simply applying the tools available to you and the methods outlined in the paper to your own data is itself a very valuable experience.