What I’ve Been Working On

Since my last blog post, I spent a few hours attempting to work out the code for my project. I perused GitHub and found several users who had written code to integrate parts of Praat with Python. However, some of the best examples were written for an older version of Python and had not been updated. I also began to realize that even once my code worked, vowel formants differ so much between speakers that I would have to tailor the program to my own voice, because supporting any user would be beyond the scope of this project.

Additionally, my original goal was to complete a project with direct implications for my a cappella group, and this program would not be beneficial to my group, since we can hear which vowel we’re trying to match, and pitch can be easily detected in real time using an application like iAudioTool.

What does this mean?

I’ve decided to return to my original idea of analyzing blend in a cappella music based on vowel formants. The following is what I’ve been working on so far and how I’ll be implementing this project this week.

Research question

How does blend differ between choral music and pop music?

Hypothesis

I hypothesize that my participants will blend more closely on rounded vowels than on unrounded vowels. Since choral music uses more rounded vowels than pop music, I hypothesize that blending to choral music is articulatorily easier than blending to pop music.

Background

As Jim discussed in class, human speech is made up of different formants. A formant is a peak of acoustic energy around a particular frequency in a sample of human speech, produced by a resonance of the vocal tract. Formants are numbered from the lowest frequency upward, and each vowel has a characteristic pattern of formant frequencies. This pattern is the fingerprint of the vowel, and it is how we hear the difference between vowels. F0, the fundamental frequency, is not strictly a formant; it corresponds to the perceived pitch. F1 is inversely related to vowel height (high vowels have a low F1), and F2 is related to backness (front vowels have a high F2, back vowels a low F2). The phoneme /i/ (the vowel in the English word “feet”) has a low F1 and a high F2, while the phoneme /u/ (the vowel in the English word “you”) has a low F1 and a low F2.

In a cappella music, vowels are extremely important, because when two singers are supposed to be singing the same vowel and they’re forming the sound differently, the differing pronunciations detract immensely from the music. This vowel matching, called blend, is therefore not just a matter of audience perception, but can be considered in light of the aforementioned vowel formants.

As I referenced in a previous blog post, some research has been done to scientifically study choral blend. I found an article in the Journal of Research in Music Education called “An Acoustical Study of Individual Voices in Choral Blend” (https://doi.org/10.1177/002242948002800205). It examines how singers’ vowel formants differ when they sing in a solo style versus a choral style in which they’re attempting to blend.

Experiment

For this study, participants will be asked to sing a series of vowels at a pitch that I will give on the piano app on my phone. The previously cited experiment tested the vowels [a], [o], [u], [e], and [i]. I will be using the same vowels, except that I will substitute [ɑ] for [a], since [ɑ] is the more common vowel in English. As in the study I read about, I will test these vowels at 3 different pitches. Participants will first sing alone. After this recording, I will play a clip of myself singing these same vowels, then play it again and ask the participants to sing along and blend with the recording.

I will also add a part to the study in which participants listen to an audio clip of a song and are then asked to sing along while blending with the singer in the audio. This component was not in the original experiment, and I think it will be interesting to hear the effects on blend of singing actual lyrics rather than just “oohs” and “ahs.” This part will also give me the opportunity to analyze blend on diphthongs, such as /aɪ/ and /eɪ/, the sounds in the English words “high” and “way,” respectively.

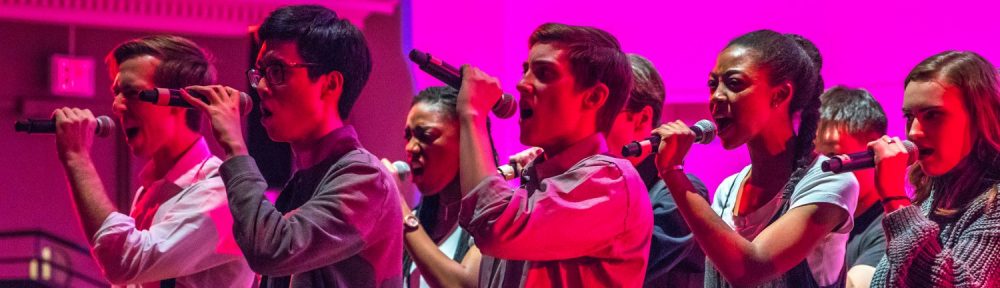

Another fascinating difference in my project is that I will be analyzing both male and female voices, while the referenced study considered only sopranos. This is super applicable for coed groups such as Under Construction, the one I’m part of.

Analysis

For each participant, I will use Praat to create a spectrogram of each of the 5 aforementioned vowels, as well as of selected vowels from the song the participants sing. I will use the “get formants” feature in Praat to extract F0 (the fundamental frequency) and formants F1 through F3, and I will then enter these data into two .txt files, one for non-blended singing and one for blended singing. The first line of each file will hold the measurements from my reference recording.
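
As a rough sketch of how one of these .txt files could be read in Python: the layout here is my own assumption, not something fixed yet. I am assuming one file per vowel, with each line holding a label followed by F0, F1, F2, and F3 in Hz, separated by spaces, and the first line holding the reference recording. The function name `read_formant_file` and all the numbers are hypothetical.

```python
from io import StringIO


def read_formant_file(handle):
    """Parse lines of the assumed form: label F0 F1 F2 F3 (values in Hz).

    Returns the first row (the reference recording) and a list of the
    remaining rows (the participants).
    """
    rows = []
    for line in handle:
        parts = line.split()
        if not parts:  # skip blank lines
            continue
        label = parts[0]
        values = [float(p) for p in parts[1:5]]
        rows.append((label, values))
    return rows[0], rows[1:]


# Made-up numbers for illustration only; StringIO stands in for a real file.
sample = StringIO(
    "reference 220 300 2300 3000\n"
    "p01 220 340 2100 2900\n"
    "p02 220 310 2250 3050\n"
)
reference, participants = read_formant_file(sample)
print(reference)
print(len(participants))
```

With a real file, `open("some_file.txt")` could be passed in place of the `StringIO` object, since the function only iterates over lines.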

I will then use Python to analyze these data. I will find the differences in formants between unblended and blended vowels, and between each participant and the recording both without and with blending. I will find the mean of these differences and analyze which vowels come closest to the reference recording. I will also create scatterplots of these data using PyLab. Following this analysis, I hope to see which vowels have the greatest potential for blending, and therefore to show differences between blending in choral and pop music.
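
Here is a minimal sketch of the comparison step for a single vowel. The distance measure (the sum of the absolute F1 and F2 differences from the reference recording) is just one simple choice I am assuming for illustration, and the participant labels and formant values are invented, not real measurements.

```python
from statistics import mean

# Hypothetical (F1, F2) values in Hz for one vowel; not real measurements.
reference = (300.0, 2300.0)
unblended = {"p01": (340.0, 2100.0), "p02": (320.0, 2250.0)}
blended = {"p01": (310.0, 2250.0), "p02": (305.0, 2280.0)}


def distance_to_reference(formants, ref):
    """Sum of absolute F1 and F2 differences from the reference, in Hz."""
    return sum(abs(f - r) for f, r in zip(formants, ref))


def mean_distance(condition, ref):
    """Average distance to the reference across all participants."""
    return mean(distance_to_reference(v, ref) for v in condition.values())


mean_unblended = mean_distance(unblended, reference)
mean_blended = mean_distance(blended, reference)
print(mean_unblended, mean_blended)
```

If the blended mean comes out smaller than the unblended mean for a vowel, participants moved toward the recording on that vowel. Repeating this per vowel would show which vowels end up closest to the reference.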

One thing I anticipate is needing a metric other than absolute frequency for the formants, since according to what I’ve learned in my phonetics course, F1-F3 may differ between individuals. I’m curious to see whether this holds for singing when blend is being attempted. If the formants are radically different, I may measure each participant’s formants relative to their own baseline rather than comparing them directly to my prerecording.
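
One possible way to express a formant relative to a participant’s own baseline is as a fractional change from their solo value. This is only a sketch of one option I am assuming, not a settled method; the function name `relative_shift` and the numbers are hypothetical.

```python
def relative_shift(solo_hz, blended_hz):
    """Fractional change from a participant's own solo (baseline) value."""
    return (blended_hz - solo_hz) / solo_hz


# Made-up F1 values in Hz for one participant singing one vowel.
solo_f1, blended_f1 = 400.0, 380.0
shift = relative_shift(solo_f1, blended_f1)
print(f"{shift:.1%}")
```

A negative value means the formant dropped when the participant tried to blend. Because the change is scaled by each singer’s own baseline, shifts can be compared across voices with different absolute formant frequencies.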