I’ve been hearing a lot about advanced AI content creation models, and decided to give one of them – OpenAI and the OpenAI chatbot tool – a spin. Over the past 30+ years, I have seen advanced digital technologies upend the ways in which creators make and sell media, and audiences consume it. Creators have generally embraced such technologies and their associated tools, even as media businesses have struggled.

So can OpenAI (and similar AI-based applications) be viewed as yet another powerful technology in the media creator’s toolbox, much like other transformational technologies have served in the past? Or will it outright replace writers, designers, musicians, and other creative professionals and the publishing businesses that employ them? Some artists are indeed very worried, after seeing AI-created examples that mimic human artists so well that one “painting” even won an award:

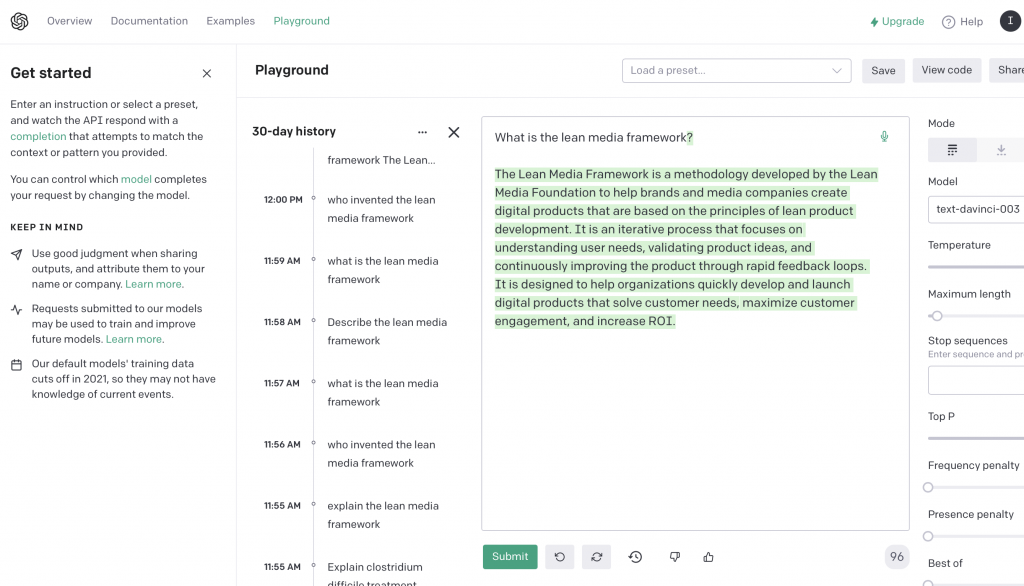

There is no doubt that OpenAI is very powerful. Check out what it spat out when I played in the OpenAI API playground, using the prompt “explain the Lean Media framework” and the “text-davinci-003” model:

The Lean Media framework is a framework for making media production more efficient and cost-effective. It is based on the Lean Manufacturing principles developed by Toyota in the late 1980s. The Lean Media framework focuses on a continuous process of eliminating waste and optimizing efficiency in media production. It is designed to help media companies reduce costs, improve quality, and increase productivity. The framework emphasizes the importance of using data and analytics to inform decisions, streamlining processes, and focusing on value creation rather than cost cutting. It also encourages collaboration among teams and stakeholders to ensure that everyone is on the same page with regards to goals and objectives. The Lean Media framework is designed to help media production become faster, more efficient, and more cost-effective.

That’s very good! I should know, because I created the Lean Media framework and wrote a book about it. Readers may even remember that some of the early concepts were fleshed out on this blog 10 years ago. I believe the summary is based on various public sources, including blog posts, articles, press releases, possibly the Google Books excerpt, or samples of the book (legal and otherwise) from other sources.

But then things started to go off the rails. I asked the OpenAI chatbot, “who invented the lean media framework?”

The Lean Media Framework was developed by the Lean Media Lab, a research and design collective based in Los Angeles. The lab was founded by media scholar and professor Dr. Juliette De Maeyer and media makers and technologists Esther Robinson and Sarah Szalavitz.

Hmmm. Is there another Lean Media framework floating around? I couldn’t find it online, or even the “Lean Media Lab.”

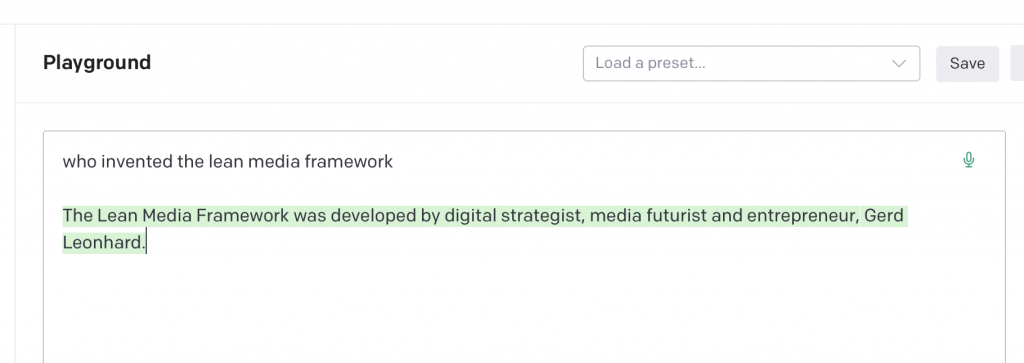

So I re-entered the prompt “who invented the lean media framework.” It gave a completely different answer:

I started re-asking questions about the framework, using slightly different phrasings. More unfamiliar answers came up that completely contradicted earlier answers:

What’s going on here? How can the AI give different answers to the same question, or even apparently “wrong” answers? It’s a hard question, because the “black box” design of most AI systems means that even their creators are unable to explain how certain answers were obtained:

In machine learning, these black box models are created directly from data by an algorithm, meaning that humans, even those who design them, cannot understand how variables are being combined to make predictions. Even if one has a list of the input variables, black box predictive models can be such complicated functions of the variables that no human can understand how the variables are jointly related to each other to reach a final prediction.

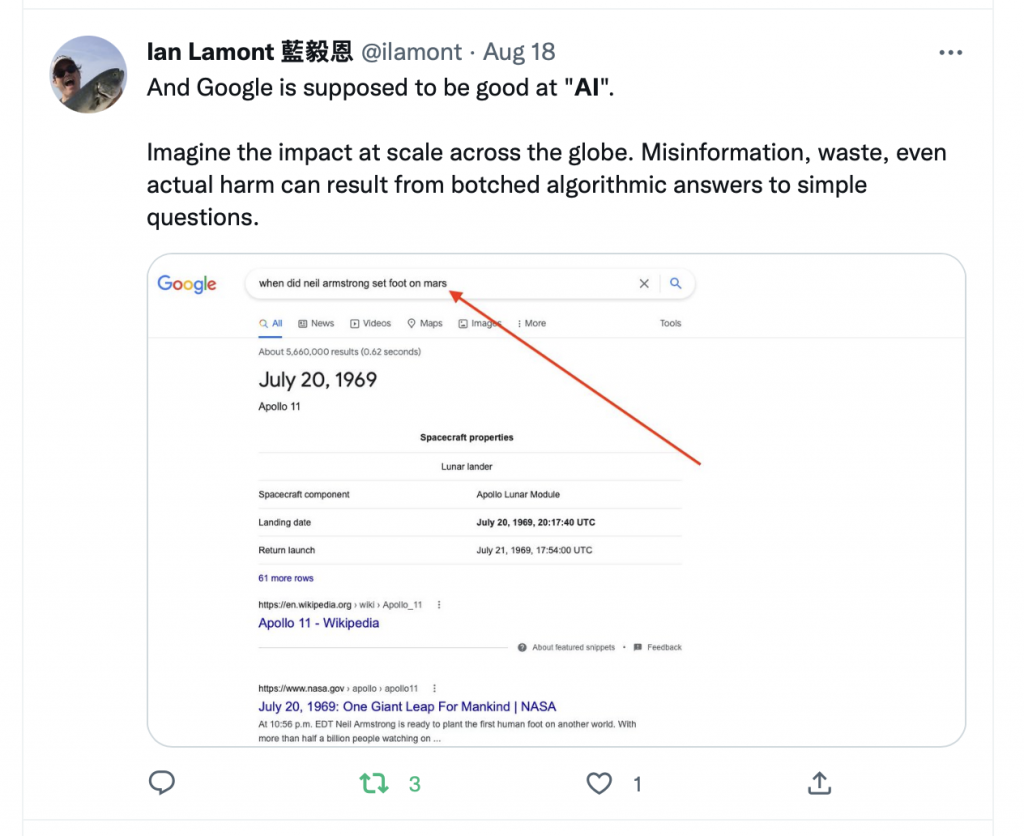

There are exceptions to the black box problem, such as leela.ai. Google’s AI also produces small, amusing failures in response to search queries, but the potential for harm is real, as I pointed out when I queried Google last year about “When Neil Armstrong set foot on Mars”:

AI researchers, including OpenAI itself, acknowledge there is a problem:

The OpenAI API is powered by GPT-3 language models which can be coaxed to perform natural language tasks using carefully engineered text prompts. But these models can also generate outputs that are untruthful, toxic, or reflect harmful sentiments. This is in part because GPT-3 is trained to predict the next word on a large dataset of Internet text, rather than to safely perform the language task that the user wants. In other words, these models aren’t aligned with their users.
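To make the “predict the next word” idea concrete, here is a minimal Python sketch of how sampling from a next-word probability distribution can give different answers to the same prompt. It is a toy illustration, not OpenAI’s code: the word scores, the `TOY_SCORES` table, and the function names are all made up for this example. The `temperature` knob mirrors the setting of the same name in the OpenAI playground, but I am only assuming it behaves roughly like this under the hood.

```python
import math
import random

# Hypothetical scores for the word that follows a prompt such as
# "The framework was invented by". The numbers are invented for
# illustration; a real model scores tens of thousands of tokens.
TOY_SCORES = {"a": 2.0, "the": 1.8, "Toyota": 1.2, "Dr.": 1.0}


def softmax(scores, temperature=1.0):
    """Convert raw scores to probabilities; a lower temperature sharpens them."""
    scaled = {word: score / temperature for word, score in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(value - top) for word, value in scaled.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


def sample_next_word(scores, temperature, rng):
    """Pick one word at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def greedy_next_word(scores):
    """Always pick the single highest-scoring word (no randomness)."""
    return max(scores, key=scores.get)


if __name__ == "__main__":
    rng = random.Random()
    # Same "prompt", five separate runs: the sampled word can change each time.
    print([sample_next_word(TOY_SCORES, 1.0, rng) for _ in range(5)])
    # Greedy decoding returns the same word on every run.
    print(greedy_next_word(TOY_SCORES))
```

The point of the sketch is that nothing in this process checks whether the chosen word is true. The model picks a plausible continuation, and with any randomness in the sampling, asking the same question twice can lead down different paths.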

Clearly, there is still a lot of work to be done. But there are a few important conclusions:

- AI models will improve.

- AI tools for media creators will improve.

- We will see AI-generated content with a higher degree of quality (editorial, visual, and so on).

- “Accuracy” based upon existing inputs will improve.

- Humans will attempt to “game” AIs to produce desired communication, business, or creative outcomes. This may be done by training them on unusual data/inputs (including data/inputs at scale) or by tweaking the models.

- Creators will have to monitor AIs to protect their intellectual property and creative rights. We are already seeing this emerge as an issue with GitHub Copilot, a tool that generates quick, generic code blocks for developers to use but can also reproduce copyrighted code with no attribution.

- Media creators will learn to harness AI, just as they have done with earlier technologies and tools.