How universities can support open-access journal publishing

June 4th, 2014

To university administrators and librarians:

…enablement becomes transformation… “Shelf of journals” image from Flickr user University of Illinois Library. Used by permission.

As a university administrator or librarian, you may see the future in open-access journal publishing and may be motivated to help bring that future about.[1] I would urge you to establish or maintain an open-access fund to underwrite publication fees for open-access journals, but to do so in a way that follows the principles that underlie the Compact for Open-Access Publishing Equity (COPE). Those principles are two:

Principle 1: Our goal should be to establish an environment in which publishers are enabled[2] to change their business model from the unsustainable closed-access model based on reader-side fees to a sustainable open-access model based on author-side fees.

If publishers could and did switch to the open-access business model, in the long term the moneys saved in reader-side fees would more than cover the author-side fees, with open access added to boot.

But until a large proportion of the funded research comes with appropriately structured funds usable to pay author-side fees, publishers will find themselves in an environment that disincentivizes the move to the preferred business model. Only when the bulk of research comes with funds that can pay author-side fees, and thereby underwrite dissemination, will publishers feel comfortable moving to that model. Principle 1 argues for a system in which author-side fees for open-access journals are largely underwritten on behalf of authors, just as the research libraries of the world currently underwrite reader-side fees on behalf of readers.[3] But who should be on the hook to pay the author-side fees on behalf of the authors? That brings us to Principle 2.

Principle 2: Dissemination is an intrinsic part of the research process. Those that fund the research should be responsible for funding its dissemination.

Research funding agencies, not universities, should be funding author-side fees for research funded by their grants. There’s no reason for universities to take on that burden on their behalf.[4] But universities should fund open-access publication fees for research that they fund themselves.

We don’t usually think of universities as research funders, but they are. They hire faculty to engage in certain core activities (teaching, service, and research), and their job performance and career advancement typically depend on all three. Sometimes researchers obtain outside funding for the research aspect of their professional lives, but where research is not funded from outside, it is still a central part of faculty members’ responsibilities. In those cases, where research is not funded by extramural funds, it is therefore being implicitly funded by the university itself. In some fields, the sciences in particular, outside funding is the norm; in others, the humanities and most social sciences, it is the exception. Regardless of the field, faculty research that is not funded from outside is university-funded research, and the university ought to be responsible for funding its dissemination as well.

The university can and should place conditions on funding that dissemination. In particular, it ought to require that if it is funding the dissemination, then that dissemination be open – free for others to read and build on – and that it be published in a venue that provides openness sustainably – a fully open-access journal rather than a hybrid subscription journal.

Organizing a university open-access fund consistent with these principles means that the university will, at present, fund few articles, for reasons detailed elsewhere. Don’t confuse slow uptake with low impact. The import of the fund is not to be measured by how many articles it makes open, but by how it contributes to the establishment of the enabling environment for the open-access business model. The enabling environment will have to grow substantially before enablement becomes transformation. It is no less important in the interim.

What about the opportunity cost of open-access funds? Couldn’t those funds be better used in our efforts to move to a more open scholarly communication system? Alternative uses of the funds are sometimes proposed, such as university libraries establishing and operating new open-access journals or paying membership fees to open-access publishers to reduce the author-side fees for their journals. But establishing new journals does nothing to reduce the need to subscribe to the old journals. It adds costs with no anticipation, even in the long term, of corresponding savings elsewhere. And paying membership fees to certain open-access publishers puts a finger on the scale so as to preemptively favor certain such publishers over others and to let funding agencies off the hook for their funding responsibilities. Such efforts should at best be funded after open-access funds are established to make good on universities’ responsibility to underwrite the dissemination of the research they’ve funded.

[1] It should go without saying that efforts to foster open-access journal publishing are completely consistent with, in fact aided by, fostering open access through self-deposit in open repositories (so-called “green open access”). I am a long-time and ardent supporter of such efforts myself, and urge you as university administrators and librarians to promote green open access as well. [Since it should go without saying, comments recapitulating that point will be deemed tangential and attended to accordingly.]

[2] I am indebted to Bernard Schutz of Max Planck Gesellschaft for his elegant phrasing of the issue in terms of the “enabling environment”.

[3] Furthermore, as I’ve argued elsewhere, disenfranchising readers through subscription fees is a more fundamental problem than disenfranchising authors through publication fees.

[4] In fact, by being willing to fund author-side fees for grant-funded articles, universities reduce the pressure that their researchers would otherwise put on funding agencies, and so merely delay the day when those agencies do their part.

A document scanning smartphone handle

March 13th, 2014

…my solution to the problem… (Demonstrating the Scan-dle to my colleagues from the OSC over a beer in a local pub. Photo: Reinhard Engels)

They are at the end of the gallery; retired to their tea and scandal, according to their ancient custom.

For a project that I am working on, I needed to scan some documents in one of the Harvard libraries. Smartphones are a boon for this kind of thing, since they are highly portable and now come with quite high-quality cameras. The iPhone 5 camera, for instance, has a resolution of 3,264 x 2,448, which comes to about 300 dpi when scanning a letter-size sheet of paper, and its brightness depth of 8 bits per pixel makes the effective resolution much higher still.

The downside of a smartphone, and any handheld camera, is the blurring that inevitably arises from camera shake when holding the camera and pressing the shutter release. True document scanners have a big advantage here. You could use a tripod, but dragging a tripod into the library is none too convenient, and staff may even disallow it, not to mention the expense of a tripod and smartphone tripod mount.

My solution to the problem of stabilizing my smartphone for document scanning purposes is a kind of document scanning smartphone handle that I’ve dubbed the Scan-dle. The stabilization that a Scan-dle provides dramatically improves the scanning ability of a smartphone, yet it’s cheap, portable, and unobtrusive.

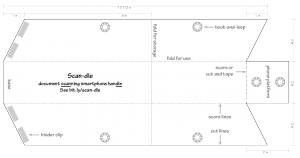

The Scan-dle is essentially a triangular cross-section monopod made from foam board with a smartphone platform at the top. The angled base tilts the monopod so that the smartphone’s camera sees an empty area for the documents.[1] Judicious use of hook-and-loop fasteners allows the Scan-dle to fold small and flat in a couple of seconds.

The plans at right show how the device is constructed. Cut from a sheet of foam board the shape indicated by the solid lines. (You can start by cutting out a 6″ x 13.5″ rectangle of board, then cutting out the bits at the four corners.)

Then, along the dotted lines, carefully cut through the top paper and foam but not the bottom layer of paper. This allows the board to fold along these lines. (I recommend adding a layer of clear packaging tape along these lines on the uncut side for reinforcement.) Place four small binder clips along the bottom where indicated; these provide a flatter, more stable base. Stick on six 3/4″ hook-and-loop squares where indicated, and cut two 2.5″ pieces of 3/4″ hook-and-loop tape.

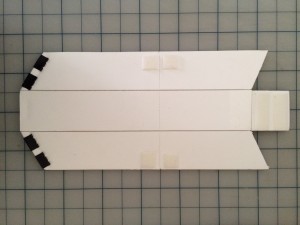

When the board is folded along the “fold for storage” line (see image at left), you can use the tape pieces to hold it closed and flat for storage.

When the board is folded along the two “fold for use” lines (see image at right), the same tape serves to hold the board together into its triangular cross section. Hook-and-loop squares applied to a smartphone case hold the phone to the platform.

To use the Scan-dle, hold the base to a desk with one hand and operate the camera’s shutter release with the other, as shown in the video below. An additional trick for iPhone users is to use the volume buttons on a set of earbuds as a shutter release for the iPhone camera, further reducing camera shake.

The Scan-dle has several nice properties:

- It is made from readily available and inexpensive materials. I estimate that the cost of the materials used in a single Scan-dle is less than $10, of which about half is the iPhone case. I happened to have everything I needed at home, so my incremental cost was $0.

- It is extremely portable. It folds flat to 6″ x 7″ x .5″, and easily fits in a backpack or handbag.

- It sets up and breaks down quickly. It switches between its flat state and ready-to-go in about five seconds.

- It is quite sufficient for stabilizing the smartphone.

The scanning area covered by a Scan-dle is about 8″ x 11″, just shy of a letter-size sheet. Of course, you can easily change the device’s height in the plans to increase that area. But I prefer to leave it short, which improves the resolution in scanning smaller pages. When a larger area is needed you can simply set the base of the Scan-dle on a book or two. Adding just 1.5″ to the height of the Scan-dle gives you coverage of about 10″ x 14″. By the way, after you’ve offloaded the photos onto your computer, programs like the freely available Scantailor can do a wonderful job of splitting, deskewing, and cropping the pages if you’d like.
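To put numbers on that trade-off, here is a back-of-the-envelope calculation using the iPhone 5 resolution of 3,264 x 2,448 quoted above. It is a sketch under simplifying assumptions: the long side of the image lies along the long side of the covered area, the image exactly fills that area, and lens distortion and blur are ignored. The function name is illustrative, not part of any tool.

```python
# Effective scan resolution (dots per inch) when a camera image is spread
# over a rectangular area. Assumes the sensor's long side is aligned with
# the area's long side; ignores lens distortion, blur, and cropping.

def effective_dpi(pixels_long: int, pixels_short: int,
                  inches_long: float, inches_short: float) -> float:
    """Return the limiting (smaller) resolution of the two axes."""
    return min(pixels_long / inches_long, pixels_short / inches_short)


IPHONE5 = (3264, 2448)  # sensor resolution quoted in the post

coverages = {
    "letter sheet (11 x 8.5 in)": (11.0, 8.5),
    "Scan-dle, standard height (11 x 8 in)": (11.0, 8.0),
    "Scan-dle raised 1.5 in (14 x 10 in)": (14.0, 10.0),
}

for label, (long_in, short_in) in coverages.items():
    dpi = effective_dpi(*IPHONE5, long_in, short_in)
    print(f"{label}: about {dpi:.0f} dpi")
```

The limiting axis is whichever has fewer pixels per inch, so a letter sheet comes out near 288 dpi and the standard-height Scan-dle near 297 dpi, while raising the device by 1.5″ drops that to roughly 233 dpi.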

Let me know in the comments section if you build a Scan-dle and how it works for you, especially if you come up with any use tips or design improvements.

Materials:

(Links are for reference only; no need to buy in these quantities.)

- foam board

- double-sided Velcro tape

- sticky-back Velcro coins

- clear packaging tape

- 3/4″ binder clips

- iPhone case

[1] The design bears a resemblance to a 2011 Kickstarter-funded document scanner attachment called the Scandy, though there are several differences. The Scandy was a telescoping tube that attached with a vise mount to a desk; the Scan-dle simplifies by using the operator’s hand as the mount. The Scandy’s telescoping tube allowed the scan area to be sized to the document; the Scan-dle must be rested on some books to increase the scan area. Because of its solid construction, the Scandy was undoubtedly slightly heavier and bulkier than the Scan-dle. The Scandy cost some €40 ($55); the Scan-dle comes in at a fraction of that. Finally, the Scandy seems no longer to be available; the open-source Scan-dle never varies in its availability.

Processing special collections: An archivist’s workstation

May 29th, 2012

John Tenniel, c. 1864. Study for illustration to Alice’s adventures in wonderland. Harcourt Amory collection of Lewis Carroll, Houghton Library, Harvard University.

We’ve just completed the spring semester, during which I taught a system design course jointly in Engineering Sciences and Computer Science. The aim of ES96/CS96 is to help the students learn about the process of solving complex, real-world problems (applying engineering and computational design skills) by undertaking an extended, focused effort directed toward an open-ended problem defined by an interested “client”.

The students work independently as a self-directed team. The instructional staff provides coaching, but the students organize and carry out all of the work, from fact-finding to design to development to presentation of their findings.

This term the problem to be addressed concerned the Harvard Library’s exceptional special collections, vast holdings of rare books, archives, manuscripts, personal documents, and other materials that the library stewards. Harvard’s special collections are unique and invaluable, but are useful only insofar as potential users of the material can find and gain access to them. Despite the herculean efforts of an outstanding staff of archivists, the scope of the collections means that large portions are not catalogued, or catalogued in insufficient detail, making materials essentially unavailable for research. And this problem is growing as the cataloging backlog mounts. The students were asked to address core questions about this valuable resource: What accounts for this problem at its core? Can tools from computer science and technology help address the problems? Can they even qualitatively improve the utility of the special collections?

The students’ recommendations centered on the design, development, and prototyping of an “archivist’s workstation” and the unconventional “flipped” collections processing that the workstation enabled. Their process involves exhaustive but lightweight digitization of a collection as a precursor to highly efficient metadata acquisition on top of the digitized images, rather than the conventional approach of digitizing selectively only after all processing of the collection is performed. The “digitize first” approach means that documents need only be touched once, with all of the sorting, arrangement, and metadata application being performed virtually using optimized user interfaces that they designed for these purposes. The output is a dynamic finding aid with images of all documents, complete with search and faceted browsing of the collection, to supplement the static finding aid of traditional archival processing. The students estimate that processing in this way would be faster than current methods, while delivering a superior result. Their demo video (below) gives a nice overview of the idea.
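The students’ actual design is documented in their final report and is not reproduced here. For readers unfamiliar with the idea, the following is only a generic, hypothetical sketch of what search and faceted browsing over item-level metadata amount to once every document has been digitized. The record fields, sample values, and function names are all invented for illustration.

```python
from collections import Counter

# Hypothetical item-level records: one per digitized image, with metadata
# attached after scanning. Field names and values are invented examples.
ITEMS = [
    {"image": "box01/f03/0001.jpg", "series": "Correspondence", "date": 1864, "type": "letter"},
    {"image": "box01/f03/0002.jpg", "series": "Correspondence", "date": 1865, "type": "letter"},
    {"image": "box02/f01/0001.jpg", "series": "Drawings", "date": 1864, "type": "sketch"},
]


def facet_counts(records, field):
    """Count how many records take each value of `field` (one facet)."""
    return Counter(r[field] for r in records if field in r)


def narrow(records, **selected):
    """Keep only records that match every selected facet value."""
    return [r for r in records
            if all(r.get(field) == value for field, value in selected.items())]


def search(records, text):
    """Naive case-insensitive search over each record's metadata values."""
    text = text.lower()
    return [r for r in records
            if any(text in str(v).lower() for v in r.values())]


# Drill down: pick a date facet, then see which document types remain.
in_1864 = narrow(ITEMS, date=1864)
print(facet_counts(in_1864, "type"))
```

Each facet selection narrows the set of images, and the counts for the remaining facets update accordingly; that feedback is what distinguishes a dynamic finding aid from a static one.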

The deliverables for the course are now available at the course web site, including the final report and a videotape of their final presentation before dozens of Harvard archivists, librarians, and other members of the community.

I hope others find the ideas that the students developed as provocative and exciting as I do. I’m continuing to work with some of them over the summer and perhaps beyond, so comments are greatly appreciated.

The new Harvard Library open metadata policy

April 27th, 2012

“Old Books” photo by flickr user Iguana Joe, used by permission (CC-by-nc)

Earlier this week, the Harvard Library announced its new open metadata policy, which the Library Board approved earlier this year, along with two initial metadata releases. The policy is straightforward:

The Harvard Library provides open access to library metadata, subject to legal and privacy factors. In particular, the Library makes available its own catalog metadata under appropriate broad use licenses. The Library Board is responsible for interpreting this policy, resolving disputes concerning its interpretation and application, and modifying it as necessary.

The first releases under the policy include the metadata in the DASH repository. Though this metadata has been available through open APIs since early in the repository’s history, the open metadata policy makes clear the open licensing terms that the data is provided under.

The release of most of the Harvard Library’s bibliographic metadata for its holdings is likely to have a much bigger impact. We’ve provided 12 million records (the vast majority of Harvard’s bibliographic data) describing Harvard’s library holdings in MARC format under a CC0 license that requests adherence to a set of community norms that I think are quite reasonable, primarily calling for attribution to Harvard and our major partners in the release, OCLC and the Library of Congress.
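For readers who have not worked with MARC, the binary transmission format (ISO 2709) is simple at its core: a 24-byte leader, a directory of 12-byte entries giving each field’s tag, length, and starting offset, and then the fields themselves, separated by terminator bytes. The sketch below is a minimal reader for a single record under simplifying assumptions (UTF-8 text, well-formed lengths). It is not the code used by any of the projects mentioned in this post, the record-building helper and sample record are hypothetical and exist only for the demonstration, and real work should use a maintained MARC library.

```python
# Minimal reader for a single MARC 21 record in the ISO 2709 transmission
# format. Illustrative only: it assumes UTF-8 text (leader/09 = "a") and
# well-formed lengths, which real-world files do not always honor.

FIELD_TERMINATOR = b"\x1e"
RECORD_TERMINATOR = b"\x1d"
SUBFIELD_DELIMITER = b"\x1f"


def parse_marc_record(raw: bytes) -> dict:
    """Return {tag: [value, ...]}. Control fields (001-009) map to strings;
    data fields map to (indicators, [(subfield_code, text), ...])."""
    leader = raw[:24]
    record_length = int(leader[0:5])      # total record length in bytes
    base_address = int(leader[12:17])     # offset where field data begins
    if len(raw) < record_length:
        raise ValueError("truncated record")

    # The directory sits between the leader and the field data and ends
    # with a field terminator; each 12-byte entry is tag(3) length(4) start(5).
    directory = raw[24:base_address - 1]
    fields: dict = {}
    for i in range(0, len(directory), 12):
        entry = directory[i:i + 12]
        tag = entry[0:3].decode("ascii")
        length = int(entry[3:7])
        start = base_address + int(entry[7:12])
        data = raw[start:start + length].rstrip(FIELD_TERMINATOR)
        if tag < "010":                   # control fields have no subfields
            value = data.decode("utf-8")
        else:
            indicators = data[:2].decode("utf-8")
            subfields = [
                (chunk[:1].decode("utf-8"), chunk[1:].decode("utf-8"))
                for chunk in data[2:].split(SUBFIELD_DELIMITER)
                if chunk
            ]
            value = (indicators, subfields)
        fields.setdefault(tag, []).append(value)
    return fields


def build_marc_record(fields: list[tuple[str, bytes]]) -> bytes:
    """Assemble a record from (tag, field_bytes) pairs. Demo helper only."""
    directory = b""
    body = b""
    for tag, content in fields:
        content = content + FIELD_TERMINATOR
        directory += f"{tag}{len(content):04d}{len(body):05d}".encode("ascii")
        body += content
    directory += FIELD_TERMINATOR
    base_address = 24 + len(directory)
    record_length = base_address + len(body) + len(RECORD_TERMINATOR)
    leader = f"{record_length:05d}nam a22{base_address:05d} a 4500".encode("ascii")
    return leader + directory + body + RECORD_TERMINATOR


# Hypothetical sample record: a control number and a title statement (245).
record = build_marc_record([
    ("001", b"000000001"),
    ("245", b"10" + SUBFIELD_DELIMITER + b"aAlice's adventures in wonderland /"
            + SUBFIELD_DELIMITER + b"cby Lewis Carroll."),
])
parsed = parse_marc_record(record)
print(parsed["001"][0])                 # 000000001
indicators, subfields = parsed["245"][0]
print(dict(subfields)["a"])             # Alice's adventures in wonderland /
```

Because every field is located through the directory rather than by scanning for delimiters, a single wrong length or offset can throw off parsing, which is one reason real-world MARC files can be “wonky” to work with.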

OCLC in particular has praised the effort, saying it “furthers [Harvard’s] mandate from their Library Board and Faculty to make as much of their metadata as possible available through open access in order to support learning and research, to disseminate knowledge and to foster innovation and aligns with the very public and established commitment that Harvard has made to open access for scholarly communication. I’m pleased to say that they worked with OCLC as they thought about the terms under which the release would be made.” We’ve gotten nice coverage from the New York Times, Library Journal, and Boing Boing as well.

Many people have asked what we expect people to do with the data. Personally, I have no idea, and that’s the point. I’ve seen over and over that when data is made openly available with the fewest impediments — legal and technical — people are incredibly creative about finding innovative uses for the data that we never could have predicted. Already, we’re seeing people picking up the data, exploring it, and building on it.

- The Digital Public Library of America is making the data available through an API that provides data in a much nicer way than the pure MARC record dump that Harvard is making available.

- Within hours of release, Benjamin Bergstein had already set up his own search interface to the Harvard data using the DPLA API.

- Carlos Bueno has developed code for the Harvard Library Bibliographic Dataset to parse its “wonky” MARC21 format, and has open-sourced the code.

- Alf Eaton has documented his own efforts to work with the bibliographic dataset, providing instructions for downloading and extracting the records and putting up all of the code he developed to massage and render the data. He outlines his plans for further extensions as well.

(I’m sure I’ve missed some of the ways people are using the data. Let me know if you’ve heard of others, and I’ll update this list.)

As I’ve said before, “This data serves to link things together in ways that are difficult to predict. The more information you release, the more you see people doing innovative things.” These examples are the first evidence of that potential.

John Palfrey, who was really the instigator of the open metadata project, has been especially interested in getting other institutions to make their own collection metadata publicly available, and the DPLA stands ready to help. They’re running a wiki with instructions on how to add your own institution’s metadata to the DPLA service.

It’s hard to list all the people who make initiatives like this possible, since there are so many, but I’d like to mention a few major participants (in addition to John): Jonathan Hulbert, Tracey Robinson, David Weinberger, and Robin Wendler. Thanks to them and the many others who have helped in various ways.

Is the pot calling the kettle black?

February 25th, 2012

“…the interpersonal processes that a student goes through…” Harvard students (2008) by E>mar via flickr. Used by permission (CC by-nc-nd)

Is the pot calling the kettle black? Oh sure, journal prices are going up, but so is tuition. How can universities complain about journal price hyperinflation if tuition is hyperinflating too? Why can’t universities use that income stream to pay for the rising journal costs?

There are several problems with this argument, above and beyond the obvious one that two wrongs don’t make a right.

First, tuition fees aren’t the bulk of a university’s revenue stream. So even if it were true that tuition is hyperinflating at the pace of journal prices, that wouldn’t mean that university revenues were keeping pace with journal prices.

Second, a journal is a monopolistic good. If its price hyperinflates, buyers can’t go elsewhere for a substitute; it’s pay or do without. But a college education can be obtained at thousands of institutions. Students and their families can and do shop around for the best bang for the buck. (Just do a search for “best college values” for the evidence.) In economists’ parlance, colleges are economic substitutes. So even if it were true that tuition at a given college is hyperinflating at the pace of journal prices, individual students can adjust accordingly. As the College Board says in its report “Trends in College Pricing 2011”:

Neither changes in average published prices nor changes in average net prices necessarily describe the circumstances facing individual students. There is considerable variation in prices across sectors and across states and regions as well as among institutions within these categories. College students in the United States have a wide variety of educational institutions from which to choose, and these come with many different price tags.

Third, a journal article is a pure information good. What you buy is the content. Pure information goods include things like novels and music CDs. They tend to have high fixed costs and low marginal costs, leading to large economies of scale. But a college education is not a pure information good. Sure, you are paying in part to acquire some particular knowledge, say, by listening to a lecture. But far more important are the interpersonal processes that a student participates in: interacting with faculty, other instructional staff, librarians, other students, in their dormitories, labs, libraries, and classrooms, and so forth. It is through the person-to-person hands-on interactions that a college education develops knowledge, skills, and character.

This aspect of college education has high marginal costs. One would not expect it to exhibit the economies of scale of a pure information good. So even if it were true that tuition is hyperinflating at the pace of journal prices, that would not take the journals off the hook; they should be able to operate with much higher economies of scale than a college by virtue of the type of good they are.[1]
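The economies-of-scale point can be stated with the standard textbook decomposition of average cost, assuming for simplicity a constant marginal cost. Here F is the fixed cost, c is the marginal cost per unit, and q is the number of units served (copies, subscribers, or students):

$$
AC(q) = \frac{F}{q} + c, \qquad \lim_{q \to \infty} AC(q) = c
$$

As q grows, average cost falls toward c. For a pure information good, c is close to zero, so average cost keeps shrinking as the audience grows; for an education built on person-to-person interaction, c is large, so average cost cannot fall much below it no matter how many students enroll.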

Which makes it all the more surprising that the claims about college tuition hyperinflating at the rate of journals are, as it turns out, just plain false.

Let’s look at what the average Harvard College student pays for his or her education.

Conan Doyle on the prevention of cruelty to books

December 1st, 2011

“…dog-eared in thirty-one places…”

I’ve been reading Arthur Conan Doyle’s first novel, The Narrative of John Smith, just published for the first time by the British Library. It’s no The Adventures of Sherlock Holmes, that’s for sure. For one thing, he seems to have left out any semblance of plot. But it does incorporate some entertaining pronouncements. Here’s one I strongly identify with:

There should be a Society for the Prevention of Cruelty to Books. I hate to see the poor patient things knocked about and disfigured. A book is a mummified soul embalmed in morocco leather and printer’s ink instead of cerecloths and unguents. It is the concentrated essence of a man. Poor Horatius Flaccus has turned to an impalpable powder by this time, but there is his very spirit stuck like a fly in amber, in that brown-backed volume in the corner. A line of books should make a man subdued and reverent. If he cannot learn to treat them with becoming decency he should be forced.

If a bibliophile House of Commons were to pass a ‘Bill for the better preservation of books’ we should have paragraphs of this sort under the headings of ‘Police Intelligence’ in the newspapers of the year 2000: ‘Marylebone Police Court. Brutal outrage upon an Elzevir Virgil. James Brown, a savage-looking elderly man, was charged with a cowardly attack upon a copy of Virgil’s poems issued by the Elzevir press. Police Constable Jones deposed that on Tuesday evening about seven o’clock some of the neighbours complained to him of the prisoner’s conduct. He saw him sitting at an open window with the book in front of him which he was dog-earing, thumb-marking and otherwise ill using. Prisoner expressed the greatest surprise upon being arrested. John Robinson, librarian of the casualty section of the British Museum, deposed to the book, having been brought in in a condition which could only have arisen from extreme violence. It was dog-eared in thirty-one places, page forty-six was suffering from a clean cut four inches long, and the whole volume was a mass of pencil — and finger — marks. Prisoner, on being asked for his defence, remarked that the book was his own and that he might do what he liked with it. Magistrate: “Nothing of the kind, sir! Your wife and children are your own but the law does not allow you to ill treat them! I shall decree a judicial separation between the Virgil and yourself: and condemn you to a week’s hard labour.” Prisoner was removed, protesting. The book is doing well and will soon be able to quit the museum.’

Portrait of Arthur Conan Doyle by Sidney Paget, c. 1890

What a wonderful, wonderful thing it is, though use has dulled our admiration of it! Here are all these dead men lurking inside my oaken case, ready to come out and talk to me whenever I may desire it. Do I wish philosophy? Here are Aristotle, Plato, Bacon, Kant and Descartes, all ready to confide to one their very inmost thoughts upon a subject which they have made their own. Am I dreamy and poetical? Out come Heine and Shelley and Goethe and Keats with all their wealth of harmony and imagination. Or am I in need of amusement on the long winter evenings? You have but to light your reading lamp and beckon to any one of the world’s great storytellers, and the dead man will come forth and prattle to you by the hour. That reading-lamp is the real Aladdin’s wonder for summoning the genii with. Indeed, the dead are such good company that one is apt to think too little of the living.

I know that there are those who think it is a sign of appreciation to write in, dog-ear, underline, highlight, and otherwise modify books — Anne Fadiman lauds such things as carnal acts — but I can’t bring myself to do so. I just can’t.

The future of the library, expressed in sculpture

October 13th, 2011

Petrus Spronk, “Architectural Fragment”, 1992. Photo © 2005 Robert Laddish (www.laddish.net), used by permission.

I’ve just been at the conference in honor of the 30th anniversary of the University of São Paulo Integrated Library System (SIBi USP). David Palmer, one of the speakers at the conference, used in his presentation a picture of a wonderful sculpture that I had never seen before, which turned out to be a public art piece at the State Library of Victoria in Melbourne, Australia by Petrus Spronk entitled “Architectural Fragment”. I place a couple of pictures of it here in honor of Spronk’s 72nd birthday, which happens to be today. You can find more images here.

Petrus Spronk, “Architectural Fragment”, 1992. Photo by flickr user madam3181, used by permission (CC by-nc-nd).

The Scouring of the White Horse

April 18th, 2011

The owld White Harse wants zettin to rights

And the Squire hev promised good cheer,

Zo we’ll gee un a scrape to kip un in zhape,

And a’ll last for many a year.

— Thomas Hughes, The Scouring of the White Horse, 1859

On a recent trip to London, I had an extra day free, and decided to visit the Uffington White Horse with a friend. The Uffington White Horse is one of the most mysterious human artifacts on the planet. In the south of Oxfordshire, less than two hours west of London by Zipcar, it sits atop White Horse Hill in the Vale of White Horse, to which it gives its name. It is the oldest of the English chalk figures, which are constructed by removing turf and topsoil to reveal the chalk layer below.

The Uffington White Horse, photo by flickr user superdove, used by permission

The figure is sui generis in its magnificence, far surpassing any of the other hill figures extant in England. The surrounding landscape (with its steep hills, the neighboring Iron Age hillfort, and pastoral lands still used for grazing sheep and cows) is spectacular.

The Uffington horse is probably best known for its appearance in Thomas Hughes’s 1857 novel Tom Brown’s Schooldays. The protagonist Tom Brown, like Hughes himself, hails from Uffington, and Hughes uses that fact as an excuse to spend a few pages detailing the then-prevalent theory of the origin of the figure, proposed by Francis Wise in 1738, that the figure was carved into the hill in honor of King Æthelred’s victory over the Danes there in 871.[1]

As it turns out, in a triumph of science over legend, Oxford archaeologists have dated the horse more accurately within the last twenty years. They conclude that the trenches were originally dug some time between 1400 and 600 BCE, making the figure about three millennia old.[2]

How did the figure get preserved over this incredible expanse of time? The longevity of the horse is especially remarkable given its construction. The construction method is a bit different from its popular presentation as a kind of huge shallow intaglio revealing the chalk substrate. Instead, it is constructed as a set of trenches dug several feet deep and backfilled with chalk. Nonetheless, over time, dirt will overfill the chalk areas and grass will encroach. Over a period of decades, this process leads chalk figures to become “lost”. Indeed, several English chalk figures are known to have been lost in this way.

Chalk figures thus require regular maintenance to prevent overgrowing. Thomas Baskerville[3] captures the alternatives: “some that dwell hereabout have an obligation upon their lands to repair and cleanse this landmark, or else in time it may turn green like the rest of the hill and be forgotten.”


| Figure from Hughes’s The Scouring of the White Horse depicting the 1857 scouring. From the 1859 Macmillan edition. |

This "repairing and cleansing" was traditionally accomplished through semi-regular celebrations called scourings, held roughly once a decade. The locals came together in a festival atmosphere to clean and repair the chalk lines, at the same time participating in competitions, games, and apparently much beer. Hughes's 1859 book The Scouring of the White Horse is a fictionalized account of the 1857 scouring, which he attended.[4]

These days, the regular maintenance of the figure has been taken over by the National Trust, which has also arranged for repair of vandalism damage and even for camouflaging of the figure during World War II.


| The author at the Uffington White Horse, 19 March 2011, with Dragon Hill in the background. Note the beginnings of plant growth on the chalk substrate. |

Thus, the survival of the Uffington White Horse bears witness to a continuous, three-millennium process of active maintenance of this artifact. As such, it provides a perfect metaphor for the problems of digital preservation. (Ah, finally, I get to the connection with the topic at hand.) We have no precedent for long-term preservation of interpretable digital objects. Books printed on acid-free paper survive quite well in a context of benign neglect. Bits, by contrast, degrade over time, much as the White Horse does. Keeping bits interpretable over time scales longer than technology-change cycles requires a constant process of maintenance and repair: mirroring,[5] verification, correction, and format migration. By coincidence, those time scales are roughly commensurate with the time scales for chalk figure loss, on the order of decades.

The tale of the Uffington White Horse provides some happy evidence that humanity can maintain this kind of active process over millennia when sufficiently motivated to establish appropriate institutions. It also serves as a reminder of the kind of loss we might see in the absence of such a process. To my knowledge, the figure is the oldest extant human artifact that has survived due to continual maintenance. In recognition of this, I propose that we adopt "the scouring of the White Horse" as a term for the regular processes of digital preservation.

[A shout out to the publican at Uffington’s Fox and Hounds Pub for the lunch and view of White Horse Hill after our visit to the horse.]

[1] Francis Wise, A Letter to Dr. Mead concerning some antiquities in Berkshire; Particularly shewing that the White Horse, which gives name to the Vale, is a Monument of the West Saxons, made in memory of great Victory obtained over the Danes A.D. 871, 1738.

[2] David Miles and Simon Palmer, "White Horse Hill," Current Archaeology, volume 142, pages 372–378, 1995.

[3] Thomas Baskerville, The Description of Towns, on the Road from Faringdon to Bristow and Other Places, 1681.

[4] One of the salutary byproducts of the recent mass book digitization efforts is the open availability of digital versions of both Hughes books through Open Library and Google Books.

[5] Interestingly, the Uffington White Horse has been "mirrored" as well, with replicas in Hogansville, GA; Juarez, Mexico; and Canberra, Australia.

Jonathan Eisen at The Tree of Life writes:

If you need any more incentive to publish a paper in an Open Access manner if you have a choice – here is one. If you publish in a closed access journal of some kind, it is likely fewer and fewer colleagues will be able to get your paper as libraries are hurting big time and will be canceling a lot of subscriptions.

He's absolutely right.[1] Eisen refers to a statement from his own university's library (UC Davis) describing a major review and cancellation process. Charles Bailey has compiled public statements from seven ARL libraries (Cornell, Emory, MIT, UCLA, UTennessee, UWashington, Yale) about substantial cuts to their budgets. My own university will be experiencing substantial collections budget cuts, in addition to major layoffs, following the 30% drop in the Harvard endowment. The Harvard libraries are not being spared.

The ARL has issued an open statement to publishers about the situation on behalf of its membership, 123 premier academic libraries in North America. It notes that in addition to 2009 cancellations, "Most member libraries are preparing cancellations of ongoing commitments for 2010."

Now more than ever, academic authors need to take responsibility for making sure that people can read what they write. Here’s a simple two-step process.

- Retain distribution rights for your articles by choosing a journal that provides for this or amending your copyright agreement with the journal (but don’t fall into the “don’t ask, don’t tell” trap).

- Place your articles in an open access repository.

As budgets get cut and cancellations mount, fewer and fewer people will be able to read (and benefit from and appreciate and cite) your articles unless you make them accessible.

[1] Except for the implication that your only recourse is an open-access journal. Besides that route, you can also publish in a traditional subscription-based closed-access journal, so long as it allows your distribution of the article or you negotiate the rights to do so. Most journals do allow this kind of self-archiving distribution.
