I’m honored to give an invited talk on “Trust and the Smart City: a view from Project GenZAI (Moonshot R&D project)” @ RO-MAN 2021

The theme of the RO-MAN conference this year was trustworthiness and cooperation.

The workshop aimed to show how a smart city could be organized to enable trust and cooperation among humans, robots, and AI agents. It was a stimulating workshop, and I learned a lot about blockchain, decentralization, and related topics.

Special thanks to Fabio P. Bonsignorio for his kind invitation!

Program

08:50 – 09:00 Welcome / Introduction – Önder Gürcan, Paris-Saclay University, CEA LIST, France

09:00 – 09:45 A Survey of Verification, Validation and Testing Solutions for Smart Contracts – Chaïmaa Benabbou and Önder Gürcan, Paris-Saclay University, CEA LIST, France

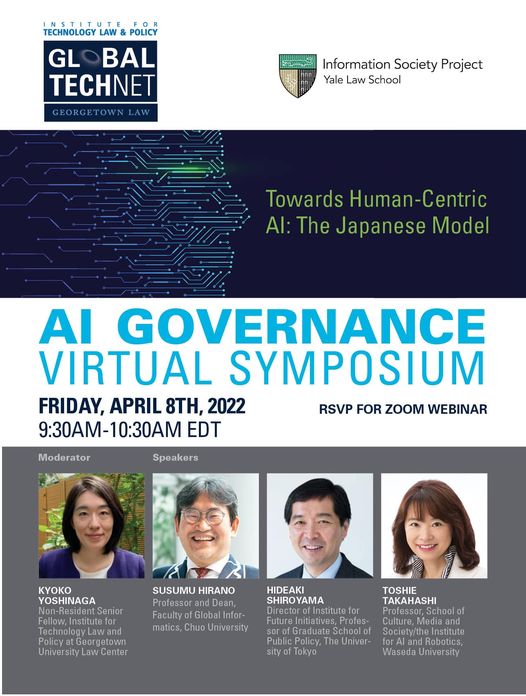

09:45 – 10:30 Trust and the Smart City: a view from Project GenZAI (Moonshot R&D project) – T. Takahashi, School of Culture, Media and Society / the Institute for AI and Robotics, Waseda University, Tokyo, Japan, Berkman Klein Center for Internet & Society, Harvard University, USA, and Leverhulme Centre for the Future of Intelligence, the University of Cambridge, UK.

10:30 – 11:05 Trustworthiness and cooperation – from home automation to citizens’ observatories – A. Kapitonov, ITMO University and AIRAlab, St. Petersburg, Russian Federation

11:05 – 11:40 Trust and cooperation in financing the 4th Industrial Revolution – V. Bulatov, Merklebot, CA, USA

11:40 – 12:25 Cooperatives on the Blockchain (Decentralization of decisions for the real world) – J. Davila, SettleMint, Brussels, Belgium

12:25 – 12:50 COOPs of Humans, Robots and AIs for the Smart Cities and Countrysides of the future – F. Bonsignorio, Heron Robots, Genoa and Renaissance from the Countryside NGO, Imperia, Italy

12:50 – 13:20 Discussion / Closing – F. Bonsignorio