Since the early days of the discipline, computer scientists have always been interested in developing environments that exhibit well-understood and predictable behavior. If a computer system were to behave unpredictably, then we would look into the specifications and we would, in theory, be able to detect what went wrong, fix it, and move on. To this end, the World Wide Web, created by Tim Berners-Lee, was not expected to evolve into a system with unpredictable behavior. After all, the creation of WWW was enabled by three simple ideas: the introduction of the URL, a globally addressable system of files, the HTTP, a very simple communication protocol that allowed a computer to request and receive a file from another computer, and the HTML, a document-description language to simplify the development of documents that are easily readable by non-experts. Why, then, in a few years did we start to see the development of technical papers that included terms such as “propaganda” and “trust”?

Soon after its creation the web began to grow exponentially because anyone could add to it. Anyone could be an author, without any guarantee of quality. The exponential growth of the web necessitated the development of search engines (SEs) that gave us the opportunity to locate information fast. They grew so successful that they became the main providers of answers to any question one may have. It does not matter that several million documents may contain all the keywords we included in our query, a good search engine will give us all the important ones in its top-10 results. We have developed a deep trust in these search results because we have so often found them to be valuable — or, when they are not, we might not notice it.

As SEs became popular and successful, web spammers appeared. These are entities (people, organizations, businesses) who realized that they could exploit the trust that Web users placed in search engines. They would game the search engines manipulating the quality and relevance metrics so as to force their own content in the ever-important top-10 of a relevant search. SEs noticed this and a battle with the web spammers ensued: For every good idea that search engines introduced to better index and retrieve web documents, the spammers would come up with a trick to exploit the new situation. When the SEs introduced keyword frequency for ranking, the spammers came up with keyword stuffing (lots of repeating keywords to give the impression of high relevance); for web site popularity, they responded with link farms (lots of interlinked sites belonging to the same spammer); in response to the descriptive nature of anchor text they detonated Google bombs (use irrelevant keywords as anchor text to target a web site); and for the famous PageRank, they introduced mutual admiration societies (collaborating spammers exchanging links to increase everyone’s PageRank). In fact, one can describe the evolution of search results ranking technology as a response to web spamming tricks. And since for each spamming technique there is a corresponding propaganda, they became the propagandists in cyberspace.

In 2004, the first elements of misinformation revolving around elections began to emerge, and political advisers recognized that, even though the web was not a major component of electoral campaigns at the time, it would soon become so. If they could just “persuade” SEs to highly rank positive articles about their candidates, along with negative articles about their opponents, they could convince a few casual web users that their message was more valid and get their votes. Elections in the US, after all, often depend on a small number of closely contested races.

Search engines have certainly tried hard to limit the success of spammers, who are seen as exploiting its technology to achieve their goals. Search results were adjusted to be less easily spammable, even if that meant that some results were hand-picked rather than algorithmically produced. In fact, during the 2008 and 2010 elections, searching the web for electoral candidates yielded results that contained official entries first: The candidate’s campaign sites, the office sites, and Wikipedia entries topped the results, well above well-respected news organizations. The embarrassment of being gamed and of the infamous “miserable failure” Google bomb were not to be tolerated.

Around the same time, we saw the development of the social web, networks that allow people to connect, exchange ideas, air opinions, and keep up with their friends. The social web created opportunities both for spreading political (and other) messages, but also misinformation through spamming. In our research we have seen several examples of propagation of politically-motivated misinformation. During the important 2010 US Senate special election in Massachusetts (#masen), spammers used Twitter in order to create a Google bomb that brought their own messages to the third position of the top-10 results by frequently repeating the same tweet. They also created the first Twitter bomb targeting individuals interested in the #masen elections with misinformation about one of the candidates, and created a pre-fab tweet factory imitating a grass-roots campaign, attacking news organizations and reporters (a technique known as “astroturfing”).

Like propaganda in society, spam will stay with us in cyberspace. And as we increasingly look to the web for information, it is important that we are able to detect misinformation. Arguably, now is the most important time for such detection, since we do not currently have a system of trusted editors in cyberspace like those which have served us well in the past (e.g. newspapers, publishers, institutions). What can we do?

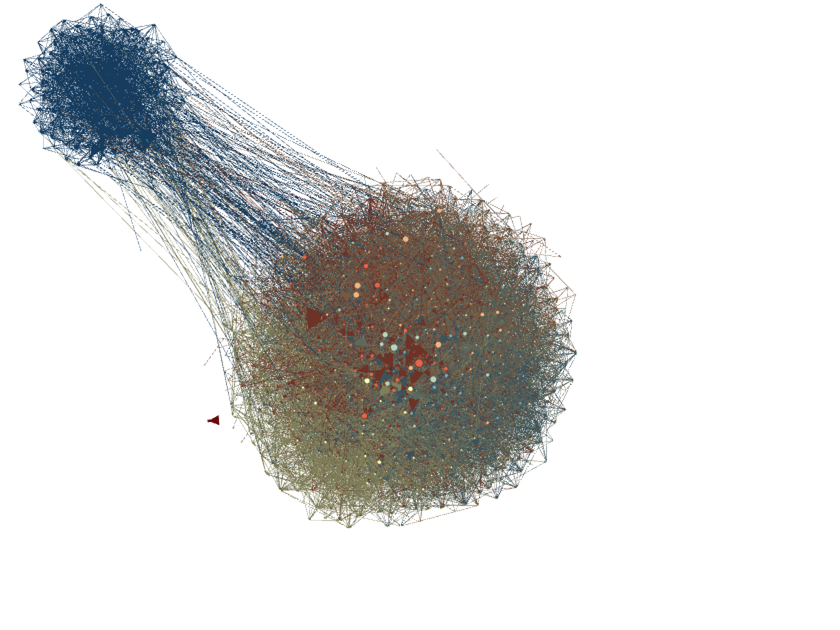

Retweeting reveals communities of likely-minded people: There are 2 larger groups that naturally arise when one considers the retweeting patterns of those tweeting during the 2010 MA special election. Sampling reveals that the smaller contains liberals and the larger conservatives. The larger one appears to consist of 3 different subgroups.

Some promising research in social media has shown potential in using technology to detect astroturfing. In particular, the following rules hold true for the most part:

(1) The credibility of the information you receive is related to the trust you have towards the original sender and to those who retweeted it;

(2) Not only do Twitter friends (those you follow) reveal a similarly-minded community, but their retweeting patterns also make these communities stronger and more visible;

(3) While both truths and lies propagate in cyberspace, lies have shorter life-spans and are questioned more often.

While we might prefer an automatic way of detecting misinformation based on algorithms, this will not happen. Citizens of cyberspace must become educated about how to detect misinformation, be provided with tools that help them question and verify information, and draw on the strengths of crowd sourcing through their own sets of trusted editors. This Berkman conference will hopefully help us push in that direction.

I think there’s a useful distinction to be maintained between misinformation and disinformation—the latter being false and misleading propaganda, the former being unintentionally-spread incorrect information. It’s interesting to contemplate the different dynamics these two streams of falsehood follow, and the ways they might interdepend, in a kind of ecosystem of truthiness.

You are making a good point about the distinction. I prefer to use “propaganda” instead of “disinformation” because it is a more widely recognized term. See the Ngram viewer about their relative usage: http://bit.ly/zsQVPv

It’s no different then news on TV . News is owned by Corporations with highly paid spokespersons who represent the interst of the company and it’s sponsors. Revenues and profit are the aim not necessarily the good of the society.

Sad I’m not there! Might be interesting to look at the use of propaganda in apparent effort to shift international perception on both Bahrain (or #Bahrain; where it was recently exposed that the government paid a US company to manipulate public opinion on the conflict) and Syria.

I can only imagine how Joseph Goebbels must be weeping right now, to see so many propaganda tools he would have given his eye teeth to have. And just as with the best of his propaganda, most people who witness what passes for “news” and “entertainment” today don’t even recognize it for what it is–or worse, do recognize it, and agree with it.

While so much of what’s on the airwaves qualifies as propaganda, an even more subtle effect is how few people remember that the first purpose of programming is to deliver consumers to advertisers.