VRM/Me2B developers shouldn’t have to wait for laws to pave the way through a wall-like status quo. (And we say that in our Privacy Manifesto.) But a good law or two should help.

That was what I had hoped, even expected, the GDPR to do. Specifically, I called it “the world’s most heavily weaponized law protecting personal privacy,” said it was “aimed at companies that track people without asking” and that it would “blow away the (mostly US-based) surveillance economy, especially tracking-based ‘adtech,’ which supports most commercial publishing online.”

That hasn’t happened.

It has been sixteen months since the GDPR went into effect (May 2018), and violation of personal privacy online today remains as pervasive as ever. Worse, violators take advantage of a loophole* in the GDPR that allows them to continue tracking people by requiring (or appearing to require) “consent” to cookies and other means of tracking (so you can get “personalized,” “interest-based” or “relevant” advertising, the perpetrators say). As long as various EU countries’ Data Protection Authorities (who enforce the GDPR) fail to focus on the simple fact that nearly every website and its third parties are doing the same bad things Google and Facebook are accused of doing, the practice will continue, and the GDPR will remain a failure at stopping widespread spying-based adtech.

Meanwhile, many privacy advocates in the U.S. (including me) have invested hope in the California Consumer Privacy Act (CCPA), which will go into effect on January 1, 2020. I invite you to visit the operative language in that law, starting here. As legalese goes, it’s remarkably readable. Meanwhile, Wikipedia compresses these rather well under the heading Intentions of the Act:

The intentions of the Act are to provide California residents with the right to:

- Know what personal data is being collected about them.

- Know whether their personal data is sold or disclosed and to whom.

- Say no to the sale of personal data.

- Access their personal data.

- Request a business to delete any personal information about a consumer collected from that consumer.

- Not be discriminated against for exercising their privacy rights.

Note that this presumes that nearly all agency resides on the data collectors’ side, and that the only agency possible on the individual’s side is asking to know or say no to what others who collected personal data can do with it.

That’s not enough.

Making matters worse is that we are mere “consumers” to the CCPA, “data subjects” to the GDPR and “users” to the computer industry—in each case with no more freedom and agency than what potential violators of our privacy (e.g. the websites and services of the world) separately grant us, through their countless, lengthy and infinitely varied privacy policies, terms and “agreements.”

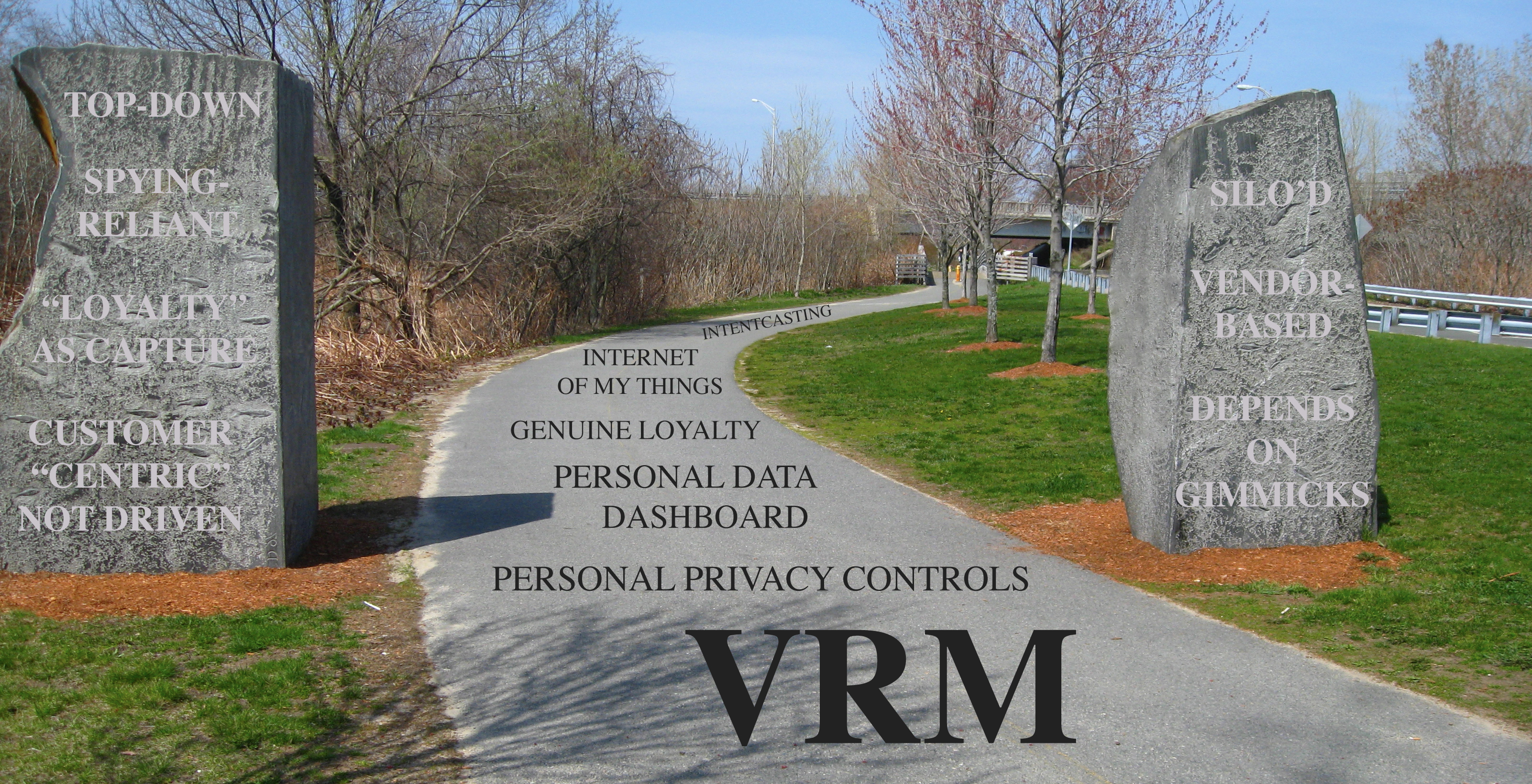

In other words, we’re still at Square Zero, and Square One is neither the CCPA nor the GDPR. Those are relevant in the ways that guard rails are relevant to a winding road; but we don’t have the road yet.

While I’ve made it clear elsewhere that we need tech more than policy (because tech of our own—VRM tech—gives us agency), it will sure help to have policy that guides the deployment of that tech.

So, what law might actually open the way for VRM development, preferably by simply giving individuals a new power they’ve been lacking, such as real control over just one aspect of their privacy: what Louis Brandeis and Samuel Warren called “the right to be let alone” when we’re online?

I like two.

First is the Do-Not-Track Act of 2019. It’s model legislation from DuckDuckGo, which explains it this way:

When you turn on the setting in your browser that says “Do Not Track”, you probably expect to no longer be tracked on most websites you visit. Right? Well, you would be wrong. But don’t worry, you’re not alone.

Our recent study on the Do Not Track (DNT) browser setting indicated that about a quarter of people have turned on this setting, and most were unaware big sites do not respect it. That means approximately 75 million Americans, 115 million citizens of the European Union, and many more people worldwide are, right now, broadcasting a DNT signal.

All of these people are actively asking the sites they visit to not track them. Unfortunately, no law requires websites to respect your Do Not Track signals, and the vast majority of sites, including most all of the big tech companies, sadly choose to simply ignore them.

Let’s change that now. Let’s put teeth behind this widely used browser setting by making a law that would align with current consumer expectations and empower people to more easily regain control of their online privacy.

While DuckDuckGo actively supports the passing of strong, comprehensive privacy laws, we also recognize that it will take time for them to take effect worldwide. In the meantime, governments can provide immediate relief by enacting separate, simpler Do Not Track legislation.

It is extremely rare to have such an exciting legislative opportunity like this, where the hardest work — coordinated mainstream technical implementation and widespread consumer adoption — is already done.

That’s why we’re announcing draft legislation that can serve as a starting point for legislators in America and beyond. It’s entitled the “Do-Not-Track Act of 2019” and, if it were to be enacted, would require sites to respect the Do Not Track browser setting in this manner:

- No third-party tracking by default. Data brokers would no longer be legally able to use hidden trackers to slurp up your personal information from the sites you visit. And the companies that deploy the most trackers across the web — led by Google, Facebook, and Twitter — would no longer be able to collect and use your browsing history without your permission.

- No first-party tracking outside what the user expects. For example, if you use Whatsapp, its parent company (Facebook) wouldn’t be able to use your data from Whatsapp in unrelated situations (like for advertising on Instagram, also owned by Facebook). As another example, if you go to a weather site, it could give you the local forecast, but not share or sell your location history.

Under this proposed law, these restrictions would only come into play if a consumer has turned on the Do Not Track signal for their Internet traffic. To keep the Internet from breaking, these restrictions would have very narrowly tailored exceptions for debugging, auditing, security, non-commercial security research, and reporting, and further limited by mandated data-minimization requirements.

In particular, each of these narrow exceptions would only apply if a site adopts strict data-minimization practices, such as using the least amount of personal information needed, and anonymizing it whenever possible. And importantly, this draft legislation takes a more realistic view of what constitutes anonymous data vs. de-identified data. Legislators need to appreciate that users can be re-identified unless companies implement extra measures of protection.
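To make those rules concrete, here is a minimal sketch in Python of how a site might decide whether a given use of data is allowed. It assumes the browser sends the `DNT: 1` request header when the setting is on. Everything else is hypothetical and mine, not the draft's: the function names, the purpose labels, and the `user_expects` flag are illustrations, and the sketch leaves out the data-minimization conditions attached to each exception.

```python
# Purposes the draft lists as narrow exceptions. The label strings are
# assumptions made for this sketch, not terms taken from the draft itself.
EXEMPT_PURPOSES = frozenset(
    {"debugging", "auditing", "security", "security_research", "reporting"}
)


def dnt_enabled(headers):
    """Return True if the request headers carry `DNT: 1`."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == "dnt":
            return value.strip() == "1"
    return False


def may_process(headers, purpose, third_party, user_expects=False):
    """Hypothetical policy check modeled on the two rules quoted above."""
    if not dnt_enabled(headers):
        # The restrictions only come into play when the signal is on.
        return True
    if purpose in EXEMPT_PURPOSES:
        return True
    if third_party:
        # No third-party tracking by default.
        return False
    # First-party use is limited to what the user expects.
    return user_expects
```

With the signal on, `may_process({"DNT": "1"}, "advertising", third_party=True)` comes back `False`, while a weather site showing the local forecast would pass `third_party=False, user_expects=True` and get `True`.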

Katherine Druckman and I also talked about this a bit with Gabriel Weinberg, CEO and founder of DuckDuckGo, in our Reality 2.0 podcast with him last month.

The other is Adrian Gropper’s Patient Privacy Rights Information Governance Label. It says,

Patient Privacy Rights Information Governance Label

August 19, 2019

Note: 0-to-5 of the boxes to be checked by the application, device, or service provider.

1. No sharing: The data is never shared with any external entities. It is not even shared in de-identified form.

2. No aggregation: The data is never aggregated with other types of input or data from external sources. This includes mixing the data gathered via The Service with other data, such as patient-reported outcomes.

3. Always voluntary self-identification: The user of The Service is able to choose their own identity. The user does not need to have their identity verified unless required by law.

4. Digital agent support: The user is able to specify a digital agent, trustee, or equivalent information manager, and this specified agent will not be subject to certification or censorship.

5. No vendor lock-in: The Service is easily and conveniently substitutable, so the user can easily move their data to another vendor providing a similar service. This prevents vendor lock-in and is often accomplished using Open Standards.

Indications for Use: The five separately self-asserted statements on the PPR Information Governance Label are subject to legal enforcement as would the privacy policy associated with The Service.

While the label is not proposed as a law, it would be good to have a law that imposes those requirements and leaves room for individuals to provide for exceptions, for example when they have working relationships with a service provider.

Maciej Ceglowski also has some good suggestions.

*Part 1 under Article 6 of the GDPR, covering the “Lawfulness of processing,” says, “Processing shall be lawful only if and to the extent that at least one of the following applies: (a) the data subject has given consent to the processing of his or her personal data for one or more specific purposes.” Hence the consent notices with an “accept” button in front of websites. These are most often presented as “cookie notices.” (Which are actually required by earlier EU law that was to some degree ignored until the GDPR came along.)

Whether a notice on the front of a website talks cookies or not, it usually means the site is obtaining your consent to being tracked “to personalize content and advertising” (or whatever) by spying on you. I’ve been told by GDPR experts that this really isn’t a loophole, and that most of these consent notices actually violate the GDPR’s letter and not just its spirit. Still, while that might be true, violation of the GDPR’s spirit remains normative.
