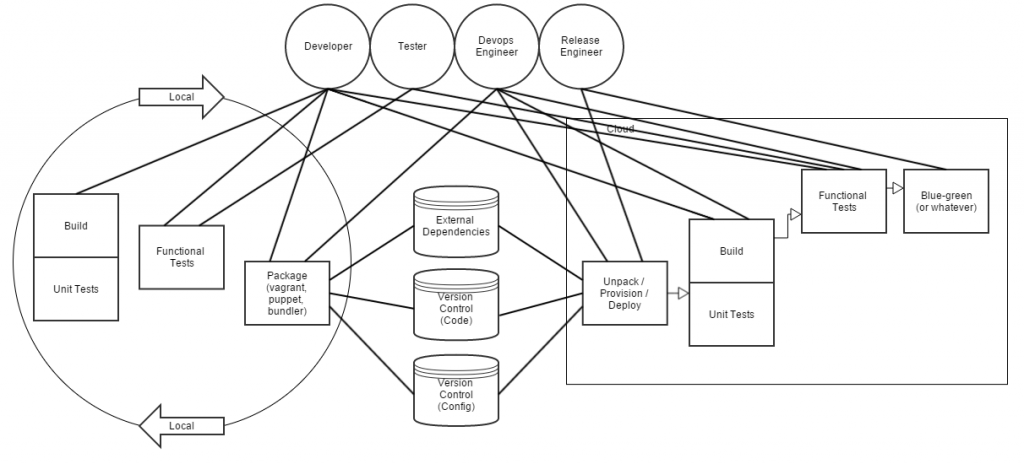

I was asked to take a look at a thought model for a Cloud Delivery Platform. I’m not going to post that graphic, because it wasn’t ready for prime time. It was confused and I’m not really sure what it was trying to show. So I created a bit of a diagram showing what I thought was important. (Just click on it, it doesn’t seem to fit right, and the next size down is too small to read.)

Halfway through I realized I’m not terribly good at making these things. I’ll get better. But there’s a few things I wanted to draw attention to in this graphic.

The Roles

This was the most important thing to me is to highlight the crossover of roles for each step. There are few steps in the process that should be perfomed by a single role. Devops is about communication.

Local Development

Coding, building, testing, packaging are all things that should be done at the same time. You don’t code 1000 lines then build. You build with every small change. In the same way, you should have tests written with the small increments. And that gets extended to “packaging”.

Packaging

I’m probably missing a very important word in my vocabulary here, but I’m going to go easy on myself, it’s late. What I’m meaning here is the process of setting up an image, or a deployable product. The configuration that will collect external dependencies and run tests when it gets to the deployed environment. This should all be done locally first. It should all be put into version control, separate from the application code. And this needs to be done in collaboration between the developer, who knows the application, and operations (or as they’ve been renamed in my organization, “Devops Engineers”) those who know how to package things appropriately.

Local Concurrence vs Cloud Linearity

Why isn’t spell check underlining “linearity”. That can’t be real. As I mentioned before, the things done locally are all done at the same time (build, test, package). That’s all just development. When it gets to the cloud, it’s a done product. Nothing should be further developed there. Nothing should be getting reworked. Everything needs to come entirely from Version Control and no manual finagling. (obviously?) So the cloud portion is linear. Everything happens in order. If it fails at any point, it’s sent back to local dev and fixed there.

Maybe this is all so obvious, it doesn’t need to be said. Maybe I’d feel better if it were just said anyway.