I subscribe to Vanity Fair. I also get one of its newsletters, replicated on a website called The Hive. At the top of the latest Hive is this come-on: “For all that and more, don’t forget to sign up for our metered paywall, the greatest innovation since Nitroglycerin, the Allman Brothers, and the Hangzhou Grand Canal.”

When I clicked on the metered paywall link, it took me to a plain old subscription page. So I thought, “Hey, since they have tracking cruft appended to that link, shouldn’t it take me to a page that says something like, ‘Hi, Doc! Thanks for clicking, but we know you’re already a paying subscriber, so don’t worry about the paywall’?”

So I clicked on the Customer Care link to make that suggestion. This took me to a login page, where my password manager filled in the blanks with one of my secondary email addresses. That got me to my account, which says my Condé Nast subscriptions look like this:

Oddly, the email address at the bottom there is my primary one, not the one I just logged in with. (Also oddly, I still get Wired.)

So I went to the Vanity Fair home page, found myself logged in there, and clicked on “My Account.” This took me to a page that said my email address was my primary one, and provided ways to change my password, to subscribe or unsubscribe to four newsletters, and to “Receive a weekly digest of stories featuring the players you care about the most.” The link below said “Start following people.” No way to check my account itself.

So I logged out from the account page I reached through the Customer Care link, and logged in with my primary email address, again using my password manager. That got me to an account page with the same account information you see above.

It’s interesting that I have two logins for one account. But that’s beside more important points, one of which I made with this message I wrote for Customer Care in the box provided for that:

Curious to know where I stand with this new “metered paywall” thing mentioned in the latest Hive newsletter. When I go to the link there — https://subscribe.condenastdigital.com/subscribe/splits/vanityfair/ — I get an apparently standard subscription page. I’m guessing I’m covered, but I don’t know. Also, even as a subscriber I’m being followed online by 20 or more trackers (reports Privacy Badger), supposedly for personalized advertising purposes, but likely also for other purposes by Condé Nast’s third parties. (Meaning not just Google, Facebook and Amazon, but Parsely and indexww, which I’ve never heard of and don’t trust. And frankly I don’t trust those first three either.) As a subscriber I’d want to be followed only by Vanity Fair and Condé Nast for their own service-providing and analytic purposes, and not by who-knows-what by all those others. If you could pass that request along, I thank you. Cheers, Doc

When I clicked on the Submit button, I got this:

An error occurred while processing your request.

Please call our Customer Care Department at 1-800-667-0015 for immediate assistance or visit Vanity Fair Customer Care online.

Invalid logging session ID (lsid) passed in on the URL. Unable to serve the servlet you’ve requested.

So there ya go: one among X zillion other examples of subscription hell, differing only in details.

Fortunately, there is a better way. Read on.

The Path

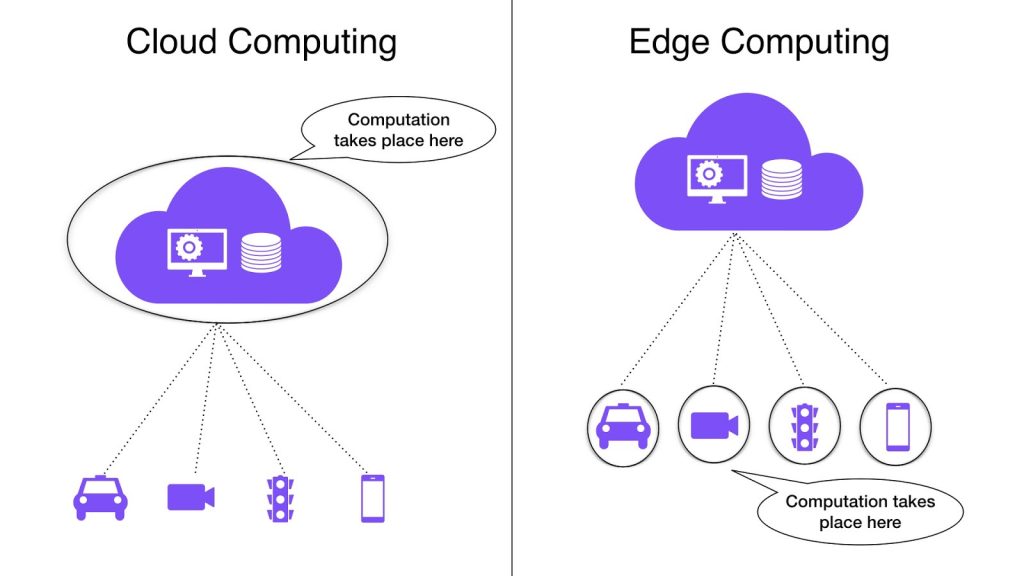

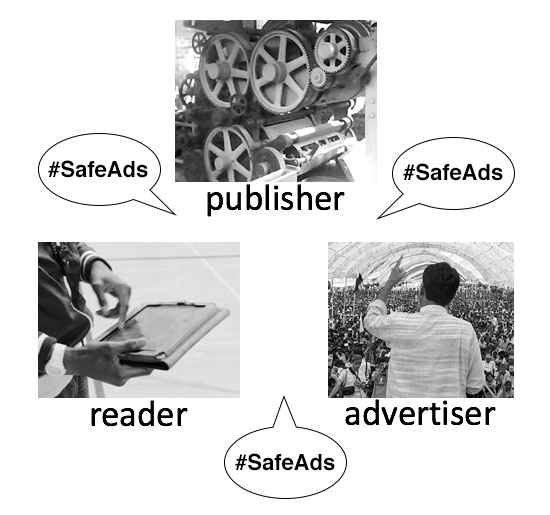

The only way to pave a path from subscription and customer service hell to the heaven we’ve never had is by normalizing the ways both work, across all of business. And we can only do this from the customer’s side. There is no other way. We need standard VRM tools to deal with the CRM and CX systems that exist on the providers’ side.

We’ve done this before.

We fixed networking, publishing and mailing online with the simple and open standards that gave us the Internet, the Web and email. All those standards were easy for everyone to work with, supported boundless economic and social benefits, and began with the assumption that individuals are full-privilege agents in the world.

The standards we need here should make each individual subscriber the single point of integration for their own data, and the responsible party for changing that data across multiple entities. (That’s basically the heart of VRM.)

This will give each of us a single way to see and manage many subscriptions, see notifications of changes by providers, and make changes across the board with one move. VRM + CRM.
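To make that concrete, here is a minimal sketch of what "one move" could look like from the customer's side. It is not a real VRM standard or any vendor's API: `SubscriptionHub`, `FakeProvider`, `update_contact` and `notify` are all names I made up for illustration, and the provider here is an in-memory stand-in rather than an actual CRM.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Protocol


class Provider(Protocol):
    """Hypothetical provider-side (CRM) interface; an assumption, not a real API."""

    name: str

    def update_contact(self, subscriber_id: str, changes: dict[str, str]) -> bool: ...


@dataclass
class FakeProvider:
    """In-memory stand-in for a publisher's CRM, only here to make the sketch runnable."""

    name: str
    records: dict[str, dict[str, str]] = field(default_factory=dict)

    def update_contact(self, subscriber_id: str, changes: dict[str, str]) -> bool:
        # Store whatever the customer sent; a real system would validate and reply.
        self.records.setdefault(subscriber_id, {}).update(changes)
        return True


@dataclass
class SubscriptionHub:
    """Customer-side (VRM) record: one person, one profile, many subscriptions."""

    subscriber_id: str
    profile: dict[str, str] = field(default_factory=dict)
    providers: list[Provider] = field(default_factory=list)
    inbox: list[str] = field(default_factory=list)

    def subscribe(self, provider: Provider) -> None:
        # Register the provider and hand it the current profile.
        self.providers.append(provider)
        provider.update_contact(self.subscriber_id, dict(self.profile))

    def change(self, **changes: str) -> dict[str, bool]:
        # One move: update the customer's own copy, then push it to every provider.
        self.profile.update(changes)
        return {
            p.name: p.update_contact(self.subscriber_id, changes)
            for p in self.providers
        }

    def notify(self, provider_name: str, message: str) -> None:
        # Providers report their own changes back to the customer's single inbox.
        self.inbox.append(f"{provider_name}: {message}")
```

In use, a subscriber would create one hub, call `subscribe()` once per publication, and then call something like `hub.change(email="new@example.com")` to update every provider at once. The design choice worth noticing is that the profile lives with the customer and the providers hold copies, which is the reverse of how things work today.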

The same goes for customer care service requests. These should be normalized the same way.

In the absence of normalizing how people manage subscription and customer care relationships, all the companies in the world with customers will have as many different ways of doing both as there are companies. And we’ll languish in the login/password hell we’re in now.

The VRM+CRM cost savings to those companies will also be enormous. For a sense of that, just multiply what I went through above by as many people as there are in the world with subscriptions, multiply that result by the number of subscriptions those people have, and then do the same for customer service.

We can’t fix this inside the separate CRM systems of the world. There are too many of them, competing in too many silo’d ways to provide similar services that work differently for every customer, even when they use the same back-ends from Oracle, Salesforce, SugarCRM or whomever.

Fortunately, CRM systems are programmable. So I challenge everybody who will be at Salesforce’s Dreamforce conference next week to think about how much easier it will be when individual customers’ VRM meets Salesforce B2B customers’ CRM. I know a number of VRM people who will be there, including Iain Henderson, of the bonus link below. Let me know you’re interested and I’ll make the connection.

And come work with us on standards. Here’s one.

Bonus link: Me-commerce — from push to pull, by Iain Henderson (@iaianh1)
